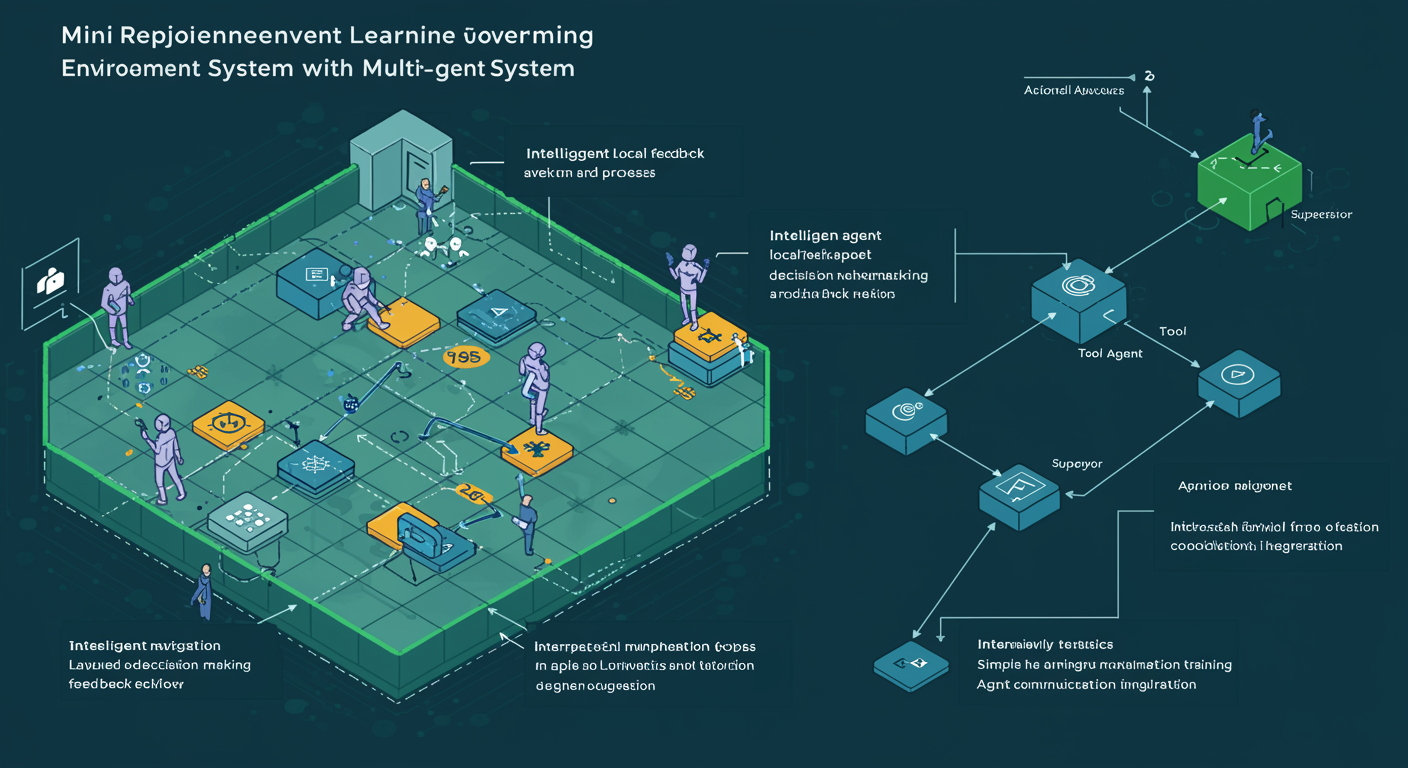

Developers have published a comprehensive tutorial on designing a mini reinforcement learning environment in which a multi-agent system learns to navigate a grid world through interaction, feedback, and layered decision-making. Everything is built from scratch and brings together three agent roles: an Action Agent, a Tool Agent, and a Supervisor. This makes it possible to observe how simple heuristics, analysis, and coordination patterns emerge in interactive learning scenarios, and gives readers a practical foundation for understanding multi-agent reinforcement learning, from basic principles to more advanced coordination mechanisms.

The tutorial lays out a practical framework for implementing multi-agent reinforcement learning systems, starting from foundational principles and working up to advanced coordination.

The three-agent architecture (Action Agent, Tool Agent, Supervisor) separates the roles clearly, which allows each agent to specialize and makes coordination patterns easier to study.

Introduction

For RL practitioners, implementing everything from scratch builds a deep understanding of the underlying mechanisms instead of encouraging black-box usage.

The grid world navigation environment serves as a controlled testing ground for observing emergent behaviors and coordination strategies.
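A grid world of this kind can be sketched in a few lines. The example below is a minimal illustration, not the tutorial's own code: the class name `GridWorld`, the `MOVES` table, the obstacle positions, and the reward values are all assumptions chosen for clarity.

```python
from dataclasses import dataclass

# Hypothetical action table: each action maps to an (dx, dy) offset.
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


@dataclass
class GridWorld:
    """Illustrative grid world; sizes and rewards are assumed values."""
    size: int = 5
    goal: tuple = (4, 4)
    obstacles: frozenset = frozenset({(1, 1), (2, 3)})
    pos: tuple = (0, 0)

    def reset(self):
        """Put the agent back at the top-left corner."""
        self.pos = (0, 0)
        return self.pos

    def step(self, action):
        """Apply one action and return (position, reward, done)."""
        dx, dy = MOVES[action]
        nx, ny = self.pos[0] + dx, self.pos[1] + dy
        out_of_bounds = not (0 <= nx < self.size and 0 <= ny < self.size)
        if out_of_bounds or (nx, ny) in self.obstacles:
            # Blocked moves leave the agent in place with a penalty.
            return self.pos, -1.0, False
        self.pos = (nx, ny)
        done = self.pos == self.goal
        # Small step cost encourages short paths; reaching the goal pays off.
        return self.pos, (10.0 if done else -0.1), done
```

Calling `env.step("right")` on a fresh environment moves the agent to `(1, 0)` with a small step cost, while a move into a wall or obstacle returns the unchanged position and a penalty.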

For educational purposes, the layered decision-making approach shows how complex behaviors can emerge from simple agent interactions.

Key Details

The local feedback mechanism lets agents learn from immediate environment responses rather than from delayed global rewards.
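One way to picture immediate, local feedback is a function that scores a single move by whether it brought the agent closer to the goal. This is a sketch under stated assumptions: the function name `local_feedback`, the signal labels, and the reward magnitudes are hypothetical and are not taken from the tutorial.

```python
def manhattan(a, b):
    """Manhattan distance between two grid cells."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def local_feedback(old_pos, new_pos, goal):
    """Score one move using only information available at that step.

    Signal names and reward values are illustrative assumptions.
    """
    if new_pos == old_pos:
        # The move was rejected by the environment (wall or obstacle).
        return {"signal": "blocked", "reward": -1.0}
    if new_pos == goal:
        return {"signal": "goal", "reward": 10.0}
    # Positive when the move reduced the distance to the goal.
    progress = manhattan(old_pos, goal) - manhattan(new_pos, goal)
    if progress > 0:
        return {"signal": "closer", "reward": 0.2}
    return {"signal": "farther", "reward": -0.2}
```

Because the signal depends only on the last transition, the agent receives usable information after every step instead of waiting for an episode to finish.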

For multi-agent research, the tutorial is a practical starting point for experimenting with different coordination strategies and communication protocols.

The adaptive decision-making component lets agents modify their strategies in response to changing environment conditions and the behavior of peer agents.
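The source does not say how adaptation is implemented. One simple possibility, shown here purely as an assumption, is to adjust an exploration rate from a sliding window of recent rewards: explore more when recent feedback is poor, exploit more when it is good. The class name `AdaptiveExplorer` and all constants are hypothetical.

```python
from collections import deque


class AdaptiveExplorer:
    """Adjusts an exploration rate from recent rewards (illustrative only)."""

    def __init__(self, epsilon=0.3, window=5, lo=0.05, hi=0.9):
        self.epsilon = epsilon
        self.recent = deque(maxlen=window)  # sliding window of rewards
        self.lo, self.hi = lo, hi

    def update(self, reward):
        """Record a reward and return the adjusted exploration rate."""
        self.recent.append(reward)
        avg = sum(self.recent) / len(self.recent)
        # Poor recent feedback: explore more. Otherwise: exploit more.
        factor = 1.5 if avg < 0 else 0.7
        self.epsilon = min(self.hi, max(self.lo, self.epsilon * factor))
        return self.epsilon
```

The same pattern could react to peer behavior by feeding it a signal derived from other agents' moves instead of the environment reward.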

Impact and Applications

For AI development teams, the modular architecture makes the system easy to extend and modify for different application domains.

The emphasis on simple heuristics shows that effective behaviors can emerge without complex neural architectures.

For reinforcement learning education, the hands-on approach provides concrete implementation experience rather than theoretical knowledge alone.

Conclusion

The multi-agent coordination aspect addresses a critical challenge in distributed AI systems, where agents must collaborate effectively.

For practical applications, the grid world foundation can be extended to more complex environments such as robotics, game AI, or resource management.

The interactive, feedback-driven learning approach shows how agents can improve their performance by interacting with the environment.

For research validation, the controlled environment enables systematic testing of different agent configurations and learning parameters.

Building from scratch ensures a complete understanding of the system's components and how they interact.

For algorithm development, the modular design allows different learning algorithms to be tested within the same framework.
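A common way to get this kind of modularity is to define a small interface that every learning algorithm implements. The sketch below assumes a hypothetical `Policy` protocol with `choose` and `learn` methods. Tabular Q-learning appears only as a familiar second example; the source does not state which algorithms the tutorial actually uses.

```python
import random
from typing import Protocol, Sequence


class Policy(Protocol):
    """Hypothetical interface that any pluggable learner would satisfy."""

    def choose(self, state: tuple, actions: Sequence[str]) -> str: ...
    def learn(self, state: tuple, action: str, reward: float,
              next_state: tuple) -> None: ...


class RandomPolicy:
    """Baseline that picks uniformly at random and never learns."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)

    def choose(self, state, actions):
        return self.rng.choice(list(actions))

    def learn(self, state, action, reward, next_state):
        pass  # nothing to update


class QTablePolicy:
    """Greedy tabular Q-learning (swapped in here as an example learner)."""

    def __init__(self, actions, alpha=0.5, gamma=0.9):
        self.actions = list(actions)
        self.alpha, self.gamma = alpha, gamma
        self.q = {}  # maps (state, action) to an estimated value

    def choose(self, state, actions):
        return max(actions, key=lambda a: self.q.get((state, a), 0.0))

    def learn(self, state, action, reward, next_state):
        best_next = max(self.q.get((next_state, a), 0.0) for a in self.actions)
        old = self.q.get((state, action), 0.0)
        # Standard one-step Q-learning update.
        target = reward + self.gamma * best_next
        self.q[(state, action)] = old + self.alpha * (target - old)
```

Any training loop written against `choose` and `learn` can then swap one policy for another without other changes.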

Observing emergent behaviors offers insight into how coordination patterns develop naturally through agent interaction.

For practical deployment, the tutorial establishes a foundation that can be scaled up to real-world multi-agent applications.

The feedback mechanisms show how local information can effectively guide the behavior of the system as a whole.

For system design, the three-role architecture provides a template for organizing complex multi-agent systems with clear responsibilities.
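The three roles can be sketched as three small classes. The source names the roles but does not spell out their responsibilities, so the division used here (the Action Agent proposes a move, the Tool Agent analyses it, the Supervisor makes the final call) is an assumption for illustration, as are all method names.

```python
# Hypothetical action table: each action maps to an (dx, dy) offset.
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


class ActionAgent:
    """Proposes a move with a greedy heuristic: reduce the larger gap."""

    def propose(self, pos, goal):
        dx, dy = goal[0] - pos[0], goal[1] - pos[1]
        if abs(dx) >= abs(dy):
            return "right" if dx > 0 else "left"
        return "down" if dy > 0 else "up"


class ToolAgent:
    """Analyses a proposed move and reports whether it is safe."""

    def __init__(self, size, obstacles):
        self.size, self.obstacles = size, set(obstacles)

    def analyse(self, pos, action):
        dx, dy = MOVES[action]
        target = (pos[0] + dx, pos[1] + dy)
        in_bounds = 0 <= target[0] < self.size and 0 <= target[1] < self.size
        return {"target": target,
                "safe": in_bounds and target not in self.obstacles}


class Supervisor:
    """Accepts a safe proposal, otherwise picks the first safe alternative."""

    def decide(self, pos, proposal, tool):
        if tool.analyse(pos, proposal)["safe"]:
            return proposal
        for alternative in MOVES:
            if tool.analyse(pos, alternative)["safe"]:
                return alternative
        return proposal  # no safe move exists; fall back to the proposal
```

With an obstacle at `(1, 0)`, the Action Agent proposes `"right"` from `(0, 0)`, the Tool Agent flags it as unsafe, and the Supervisor falls back to `"down"`. Each layer works from a different level of information, which is one simple reading of layered decision-making.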

The adaptive capabilities let the system handle dynamic environments in which conditions change over time.

For learning optimization, the layered decision-making approach supports different levels of abstraction in the agents' reasoning.

The focus on practical implementation ensures that concepts translate directly into working code rather than remaining theoretical.

For collaborative AI research, the coordination mechanisms provide a foundation for studying how artificial agents can work together effectively.

In summary, the tutorial builds a mini reinforcement learning environment from scratch in which a multi-agent system learns to navigate a grid world through interaction, feedback, and layered decision-making. Its three roles (Action Agent, Tool Agent, Supervisor) make it possible to observe how simple heuristics, analysis, and coordination patterns emerge, and it aims to set a practical standard for multi-agent RL education.

For RL practitioners, educators, researchers, and AI development teams, the main benefits are:

- a deep understanding of the underlying mechanisms rather than black-box usage;
- a demonstration of how complex behaviors emerge from simple agent interactions, without complex neural architectures;
- learning from immediate environment responses rather than delayed global rewards;
- a practical starting point for experimenting with coordination strategies and communication protocols;
- a modular design that is easy to extend to other domains and that allows different learning algorithms to be tested within the same framework;
- a controlled environment for systematic testing of agent configurations and learning parameters;
- a foundation that can be extended to more complex environments such as robotics, game AI, or resource management, and scaled up to real-world multi-agent applications;
- a template for organizing complex multi-agent systems with clear responsibilities and different levels of abstraction in agent reasoning;
- a basis for studying how artificial agents can work together effectively.